AI: Is MCP the one feature to rule them all?

If you haven’t been living under a rock, you’ve almost certainly heard about or seen a mention of MCP (Model Context Protocol). But is it the shiny solution to everything when creating your AI agents? While it is a good feature, there are trade-offs to consider, so continue reading to get a deeper understanding.

What is MCP?

This is a fairly new feature, introduced by Anthropic in late 2024 as an open standard. It aims to standardize how AI systems, particularly large language models (LLMs), integrate and share data with external tools and data sources. Think of it as a universal «USB port» for AI. The goal is to solve the problem of building custom, one-off integrations for every single tool you want an AI to interact with. Without a shared protocol, this is a cumbersome M x N problem (M models times N tools). MCP reduces it to an M + N problem, where both the AI and the tools only need to conform to a single protocol. Since its introduction, major players like OpenAI and Google DeepMind have also adopted it.
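To make the M x N versus M + N difference concrete, here is a small worked sketch in Python. The numbers (3 models, 10 tools) are hypothetical and only illustrate the counting argument.

```python
def integrations_without_mcp(models: int, tools: int) -> int:
    """Every model needs its own custom integration with every tool: M x N."""
    return models * tools


def integrations_with_mcp(models: int, tools: int) -> int:
    """Each model implements the protocol once and each tool exposes it once: M + N."""
    return models + tools


if __name__ == "__main__":
    m, n = 3, 10  # hypothetical: 3 models, 10 tools
    print(f"Without MCP: {integrations_without_mcp(m, n)} integrations")
    print(f"With MCP:    {integrations_with_mcp(m, n)} integrations")
```

With those numbers it prints 30 integrations without MCP and 13 with it, and the gap widens as either side grows.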

MCP works on a client-server model. The host application (like Copilot Studio) runs the client, and the external data source or tool (like a database or a web search API) sits behind the server. The server exposes its capabilities (resources, tools, and prompts) to the client, allowing the AI to discover and use them. This is what gives your agent superpowers, enabling it to access real-time information, perform actions, and go beyond its initial training data. For a more detailed walkthrough of MCP, you can read my earlier post on this here.
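Under the hood, MCP messages are JSON-RPC 2.0. The minimal sketch below only builds the two requests a client typically sends to discover and then use a tool, `tools/list` and `tools/call` (method names from the MCP specification). It is an illustration, not an SDK: it leaves out the `initialize` handshake and the transport (stdio or HTTP), and the `get_weather` tool with its `city` argument is a hypothetical example.

```python
import json
from typing import Optional


def jsonrpc_request(request_id: int, method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, the message format MCP uses on the wire."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# 1. Discovery: ask the server which tools it exposes.
list_tools = jsonrpc_request(1, "tools/list")

# 2. Invocation: call one of those tools by name, with arguments.
#    "get_weather" and its "city" argument are hypothetical placeholders.
call_tool = jsonrpc_request(
    2, "tools/call", {"name": "get_weather", "arguments": {"city": "Oslo"}}
)

if __name__ == "__main__":
    print(json.dumps(list_tools))
    print(json.dumps(call_tool))
```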

MCP server configurations

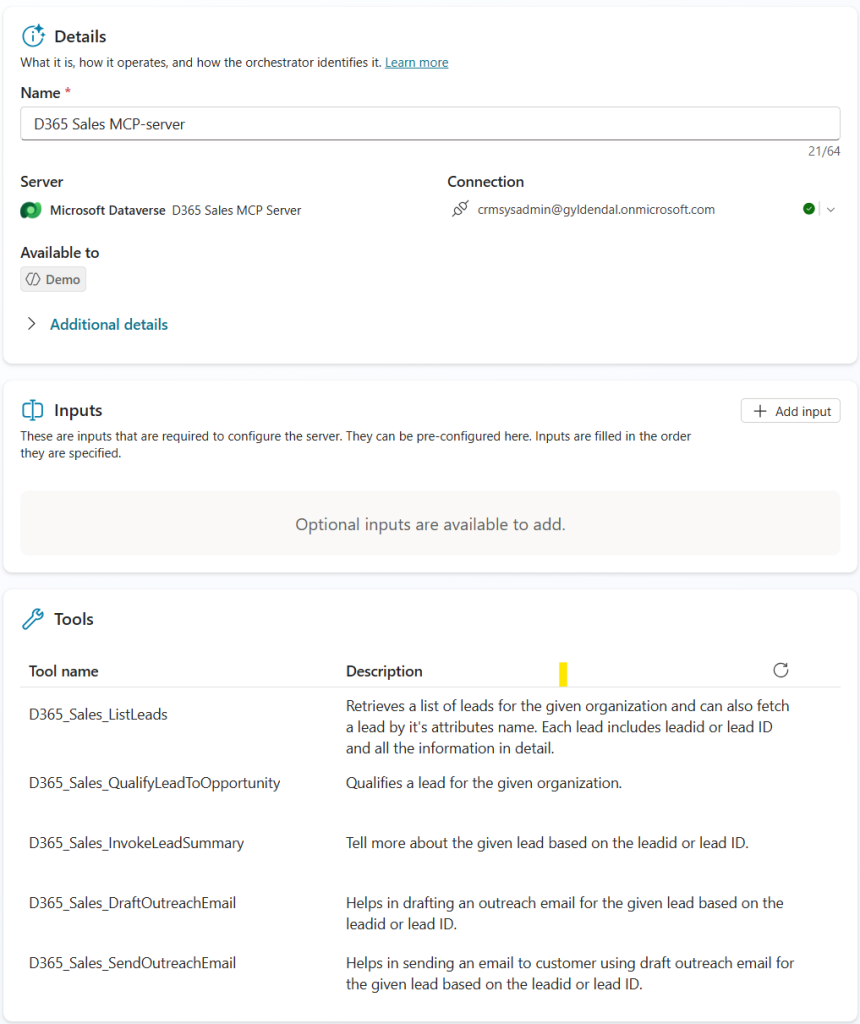

When you create an MCP server, you package different tools into its configuration. So instead of connecting to each of the tools you need from a specific provider, you connect to the MCP server and pass the prompt to it in the call. The most suitable tool, if any, is then picked to answer it, and the response might also combine the output of several of the server’s configured tools.

Here is an example of the D365 Sales MCP server. As you can see, it has five tools configured, created specifically to help with the sales side of Dynamics.
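The idea of one server bundling several tools can be sketched as a toy, in-process server. This is a hypothetical illustration, not how the D365 Sales MCP server is built: the class name `ToyMcpServer` and the two sales-flavored tools are made up, and it skips the transport, the handshake and the input schemas a real MCP server publishes for each tool. It only mimics the shape of `tools/list` and `tools/call` responses.

```python
from typing import Any, Callable, Dict, List, Tuple


class ToyMcpServer:
    """Hypothetical in-process stand-in for an MCP server: a named bundle of tools.

    No transport, no initialize handshake and no input schemas; it only
    mimics the shape of tools/list and tools/call responses.
    """

    def __init__(self, name: str) -> None:
        self.name = name
        self._tools: Dict[str, Tuple[str, Callable[..., Any]]] = {}

    def tool(self, name: str, description: str) -> Callable:
        """Decorator that registers a function as a tool on this server."""
        def register(func: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[name] = (description, func)
            return func
        return register

    def handle(self, request: Dict[str, Any]) -> Dict[str, Any]:
        """Answer a JSON-RPC request for tools/list or tools/call."""
        method = request.get("method")
        if method == "tools/list":
            result: Dict[str, Any] = {
                "tools": [{"name": n, "description": d} for n, (d, _) in self._tools.items()]
            }
        elif method == "tools/call":
            params = request.get("params", {})
            _, func = self._tools[params["name"]]  # unknown tool names raise KeyError (kept simple)
            output = func(**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": str(output)}]}
        else:
            return {
                "jsonrpc": "2.0",
                "id": request.get("id"),
                "error": {"code": -32601, "message": "Method not found"},
            }
        return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}


# Hypothetical sales-flavored tools; these are NOT the actual D365 Sales MCP tools.
sales = ToyMcpServer("sales")


@sales.tool("list_open_opportunities", "List open opportunities for an account.")
def list_open_opportunities(account: str) -> List[str]:
    return [f"{account}: renewal", f"{account}: upsell"]


@sales.tool("summarize_account", "Return a one-line summary of an account.")
def summarize_account(account: str) -> str:
    return f"{account} is an active customer."


if __name__ == "__main__":
    print(sales.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
    print(sales.handle({
        "jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "summarize_account", "arguments": {"account": "Contoso"}},
    }))
```

Calling `tools/list` returns only tool names and descriptions, which is the information a client has to go on before it decides to call anything.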

Cool! So should I always use MCP over tools?

While it’s a fantastic feature that gives your agent superpowers, it’s not always the optimal choice once you start to look at the cost. At least not yet. Continue reading to understand why.

The Cost of a Conversation

In Copilot Studio and many other systems, token usage directly translates to cash. With many AI providers, you’re billed per token, and the price can vary significantly depending on the model you use, so complex queries that use a lot of tokens can quickly add up.
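For providers that bill per token, the cost of a call is roughly tokens times price, usually with separate prices for input and output tokens. The sketch below uses hypothetical model names and prices per 1,000 tokens, not any provider’s actual rates, to show how the same query costs very different amounts depending on the model and how that scales with volume.

```python
def call_cost(input_tokens: int, output_tokens: int,
              input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Cost of one model call when input and output tokens are billed separately."""
    return (input_tokens / 1000) * input_price_per_1k + (output_tokens / 1000) * output_price_per_1k


# Hypothetical (input, output) prices per 1,000 tokens for a cheaper and a pricier model.
PRICES = {
    "small-model": (0.0005, 0.0015),
    "large-model": (0.01, 0.03),
}

if __name__ == "__main__":
    input_tokens, output_tokens = 2_000, 500  # one fairly long query
    for model, (price_in, price_out) in PRICES.items():
        per_call = call_cost(input_tokens, output_tokens, price_in, price_out)
        print(f"{model}: {per_call:.4f} per call, {per_call * 10_000:.2f} per 10,000 calls")
```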

Generative orchestration is an approach where your AI agent uses an LLM to dynamically choose which action to use for different parts of a user’s question. I have covered generative orchestration in an earlier post, which you can find here: https://sjoholt.com/classic-vs-generative-orchestration-in-copilot-studio/

For actions (now called tools in Copilot Studio), your agent has a clear description of what they do before it calls them. This means the AI has enough information to decide if a particular tool is the right one to use before it incurs the cost of calling it.
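With classic tools, the selection step can be made entirely from the descriptions. In a real agent an LLM makes this choice; the sketch below swaps in simple keyword overlap as a stand-in, and the tool catalog is hypothetical. What it illustrates is that the decision happens before any tool is called, so an unrelated tool is simply never invoked.

```python
import re
from typing import Dict, List, Set

# Hypothetical tool catalog. With classic tools, the orchestrator can read every
# description before it calls anything.
TOOLS: Dict[str, str] = {
    "get_ceo": "Find the CEO of a company.",
    "get_education": "Find which university a person attended.",
    "get_temperature": "Get the current temperature in a city.",
    "create_invoice": "Create an invoice for a customer.",
}

# Filler words ignored when comparing texts.
STOPWORDS = {"a", "an", "the", "of", "in", "to", "and", "is", "are", "for",
             "who", "what", "where", "which", "that", "did", "he", "find", "get"}


def keywords(text: str) -> Set[str]:
    """Lowercase the text, split it into words and drop the filler words."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def pick_tools(question: str, tools: Dict[str, str]) -> List[str]:
    """Stand-in for the LLM: keep tools whose description shares a keyword with the question."""
    q = keywords(question)
    return [name for name, description in tools.items() if q & keywords(description)]


if __name__ == "__main__":
    question = ("Who is the CEO of the company that owns GitHub, where did he go "
                "to university and what are the temperature in that city")
    print(pick_tools(question, TOOLS))
```

For the question used in the experiment later in this post, it keeps `get_ceo`, `get_education` and `get_temperature` and never touches `create_invoice`.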

For MCP, however, the agent doesn’t have the content before calling the server; it only has a description of the server’s capabilities. If you have several MCP servers configured, the agent might run through each of them to get the necessary context, potentially burning a lot of expensive tokens. It’s like calling every single person in your contact list to ask a question instead of knowing exactly who to call.
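The difference can be put as a back-of-the-envelope token count. This sketch assumes the worst case described above, where every MCP server is queried once for context before the needed tools are called. The token figures (800 per server probe, 1,500 per tool call) are hypothetical, so only the shape of the result matters: the MCP overhead grows linearly with the number of configured servers.

```python
def tokens_with_tools(needed_tools: int, tokens_per_tool_call: int) -> int:
    """Classic tools: only the tools chosen from their descriptions are called."""
    return needed_tools * tokens_per_tool_call


def tokens_with_mcp(servers: int, tokens_per_server_probe: int,
                    needed_tools: int, tokens_per_tool_call: int) -> int:
    """Assumed worst case: every MCP server is queried for context first,
    then the tools actually needed are called."""
    return servers * tokens_per_server_probe + needed_tools * tokens_per_tool_call


if __name__ == "__main__":
    # Hypothetical numbers: 2 tools needed, 800 tokens per server probe, 1,500 per tool call.
    baseline = tokens_with_tools(2, 1_500)
    for servers in (1, 3, 5):
        mcp = tokens_with_mcp(servers, 800, 2, 1_500)
        print(f"{servers} MCP server(s): {mcp} tokens vs {baseline} with tools only")
```

With a baseline of 3,000 tokens, one, three and five servers come out at 3,800, 5,400 and 7,000 tokens respectively.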

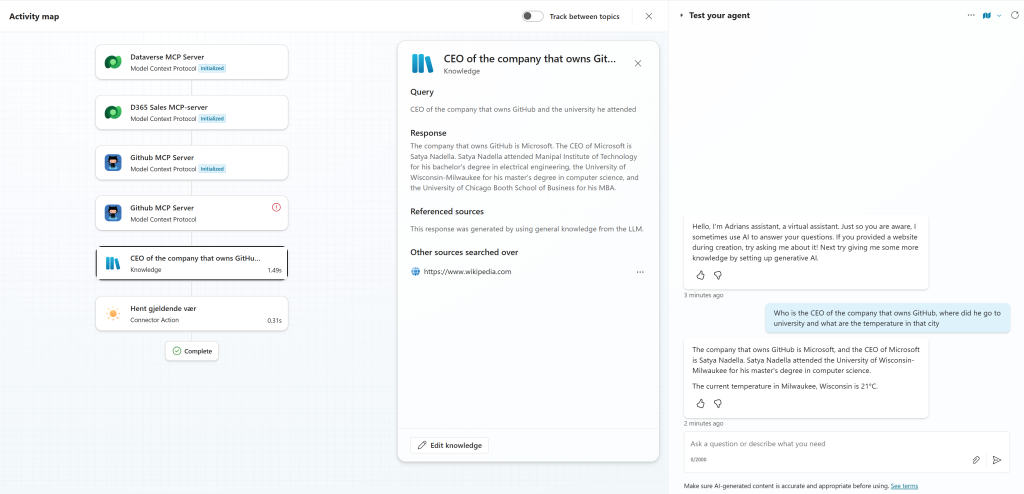

Using the same input as I did in the last article: «Who is the CEO of the company that owns GitHub, where did he go to university and what are the temperature in that city»

Last time, the agent went to the two configured actions and provided us with the answer. Now it still gives the same answer, but it goes through all our MCP servers before calling the tools actually needed to give the answer.

Key takeaways

The key takeaway for any developer or business is to carefully consider the trade-offs. MCP represents a significant step forward in building powerful and versatile AI agents: by orchestrating a symphony of tools and data sources, MCP servers empower agents to go beyond the limitations of a single large language model. But while MCP unlocks a new level of capability, it also requires careful planning and configuration to manage complexity and cost. Ultimately, embracing this architecture is a powerful way to expand an agent’s potential and create truly intelligent, adaptive systems, as long as you keep a clear focus on the cost.